Award-Winning Injury Attorney

Award-Winning Customer Service

Let’s start with who we are NOT. This helps set us apart from many local Personal Injury law firms.

- We are not the biggest PI firm. But there are benefits to working with smaller firms.

- We do not just settle cases without pushing for more. Many firms try to settle as quickly as possible so they can move through cases and make money faster. In their haste, they sometimes miss key details.

- We are not content with merely meeting the legal standard. We aim to do better and raise the bar on service.

- We do not spend millions on billboards, bus wraps, TV, or traditional law firm marketing media.

Accident Lawyer AZ Service Areas

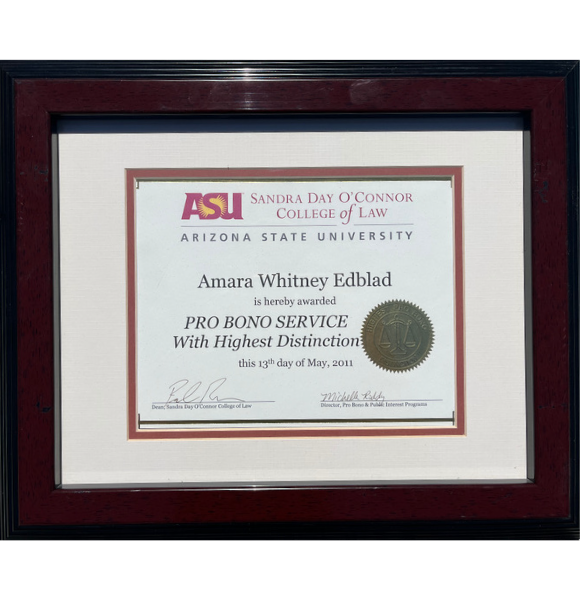

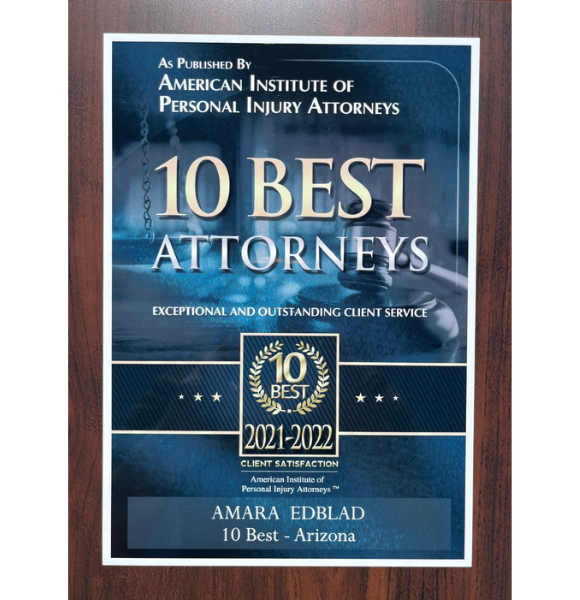

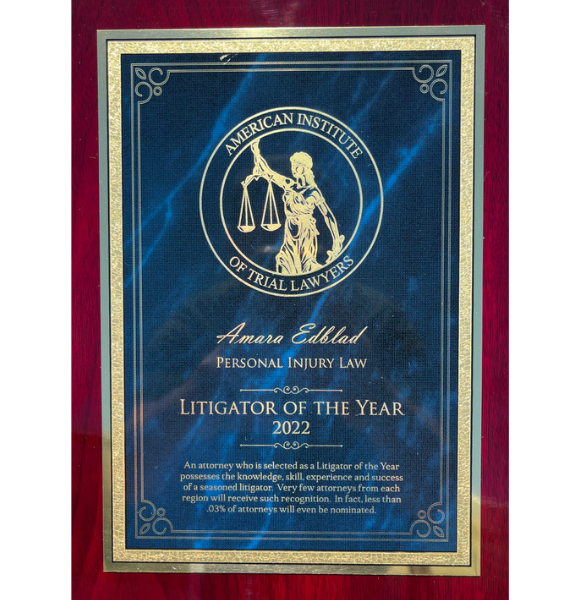

Amara’s Awards

We Don’t Get Paid Unless You Win

We work purely on a contingency-fee basis. That means if we don’t get a settlement from the insurance company for you, we don’t get paid. There is no risk to you.

The Highest Quality Service

Amara Edblad has 12 years of experience as an attorney and over a decade of experience practicing Personal Injury law exclusively in Arizona.

During that time, she has received over a dozen accolades, including “Top Personal Injury Litigator of the Year” in 2022 from the American Institute of Trial Lawyers. She was also nationally ranked as a Top 10 attorney by the National Academy of Personal Injury Attorneys in each of the three years from 2020 to 2022.

Amara has been lauded for her responsiveness, communication, and empathy with clients, and for her overall talent as an attorney.

Experienced Staff

We don’t spend millions on billboards. Instead, we invest our money in the people who make our company great. Amara & Associates may be new, but the people who make up Amara & Associates have been working together for years, some for almost a decade.

Our team is knowledgeable, compassionate, and committed to helping you after your injury.